What Is a Code Interpreter?

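A code interpreter is a program that directly executes instructions written in a programming language, without first compiling the whole program into a standalone executable. A compiler translates high-level code into machine code ahead of time. An interpreter instead parses the source and executes it as the program runs, typically statement by statement. Many interpreters, such as CPython, first translate the source into an intermediate bytecode and then execute that. This is useful for debugging and development, because runtime errors surface as soon as the offending statement runs and can be corrected immediately.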
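To make the parse-then-execute cycle concrete, here is a minimal sketch of an interpreter for a hypothetical two-statement mini-language (`name = expression` and `print expression`). It reuses Python's `ast` module to parse arithmetic expressions and executes the program one line at a time. Earlier lines therefore take effect before an error on a later line is reported. The mini-language and the `run` function are illustrative assumptions, not how any production interpreter is built.

```python
import ast
import operator

# Arithmetic operators supported by this hypothetical mini-language.
OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def evaluate(node, env):
    """Recursively evaluate a parsed arithmetic expression against variables in env."""
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.Name):
        if node.id not in env:
            raise NameError(f"undefined variable {node.id!r}")
        return env[node.id]
    if isinstance(node, ast.BinOp) and type(node.op) in OPS:
        return OPS[type(node.op)](evaluate(node.left, env), evaluate(node.right, env))
    raise SyntaxError("unsupported expression")


def run(source):
    """Parse and execute one line at a time; stop at the first line that fails."""
    env, output = {}, []
    for lineno, line in enumerate(source.splitlines(), start=1):
        line = line.strip()
        if not line:
            continue
        try:
            if line.startswith("print "):
                expr = ast.parse(line[len("print "):], mode="eval").body
                output.append(str(evaluate(expr, env)))
            else:
                name, expr_src = (part.strip() for part in line.split("=", 1))
                env[name] = evaluate(ast.parse(expr_src, mode="eval").body, env)
        except Exception as exc:
            # Earlier lines have already run; the error is reported right away.
            output.append(f"line {lineno}: {type(exc).__name__}: {exc}")
            break
    return output


if __name__ == "__main__":
    print(run("x = 2 + 3\nprint x * 4\nprint y\nprint x"))
    # → ['20', "line 3: NameError: undefined variable 'y'"]
```

Line 2 has already printed its result by the time line 3 fails, and line 4 never runs. This is the immediate, in-order feedback that makes interpreters convenient during development.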

Interpreters are valuable wherever dynamic analysis and quick iteration matter. They support interactive debugging, making it easier for developers to test small code snippets and understand program flow. A newer use case is large language models (LLMs). An LLM such as GPT-4 or Google Gemini, when combined with a code interpreter, can write its own code and execute it in a sandboxed environment. This lets it compute exact results, analyze files, and generate charts instead of relying on text generation alone.

Because interpretation happens at runtime, interpreted code generally runs slower than pre-compiled code.

This is part of an extensive series of guides about AI technology.

In this article:

- Traditional Applications of Code Interpreters

- The Role of Code Interpreters in LLMs: Key Use Cases

- Notable Code Interpreter Tools

- How to Choose LLM Code Interpreter Tools

Traditional Applications of Code Interpreters {#traditional-applications-of-code-interpreters}

Here are some common uses of code interpreters:

- Testing code snippets: Developers often need to verify the behavior of small sections of code to ensure they function as expected. By using interpreters, these snippets can be executed in isolation, providing immediate feedback and supporting iterative development.

- IDE integration: Some online coding platforms and integrated development environments (IDEs) have built-in interpreters, allowing users to run and modify code snippets on the fly, with real-time error checking.

- Writing scripts: Code interpreters are indispensable for writing scripts, which are small programs or routines designed to automate tasks. Scripting languages like Python, Ruby, and JavaScript benefit greatly from interpreters because they allow scripts to run immediately after writing, without the need for a compilation step.

- Debugging applications: Interpreters can execute code line by line, which helps developers identify and fix errors. Stepping through execution shows how the code behaves and often locates bugs faster than a full compile-and-run cycle would.
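Python's own debugger, `pdb`, is built on the standard `sys.settrace` hook. The sketch below uses that hook directly to record each line of a function, along with its local variables, just before the line runs. This is the kind of step-by-step view described in the debugging item above. `trace_lines` and `buggy_average` are hypothetical names chosen for illustration.

```python
import sys


def trace_lines(func, *args):
    """Run func(*args), recording (line offset, locals) before each line executes."""
    events = []
    code = func.__code__

    def tracer(frame, event, arg):
        # Ignore every frame except the one running the target function.
        if frame.f_code is not code:
            return None
        if event == "line":
            # Offset 0 is the `def` line; the first body line is offset 1.
            events.append((frame.f_lineno - code.co_firstlineno, dict(frame.f_locals)))
        return tracer

    sys.settrace(tracer)
    try:
        result = func(*args)
    finally:
        sys.settrace(None)  # always remove the hook, even if func raises
    return result, events


def buggy_average(values):
    total = 0
    for v in values:
        total += v
    return total / len(values) - 1  # bug: stray "- 1"


if __name__ == "__main__":
    result, events = trace_lines(buggy_average, [1, 2, 3])
    for offset, local_vars in events:
        print(f"line +{offset}: {local_vars}")
    print("result:", result)  # result: 1.0, although the average is 2.0
```

Reading the trace shows that `total` is 6 when the `return` line runs, which narrows the bug to that final expression.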

The Role of Code Interpreters in LLMs: Key Use Cases {#the-role-of-code-interpreters-in-llms-key-use-cases}

LLMs equipped with a code interpreter commonly use it for the following tasks.

Data Analysis and Visualization

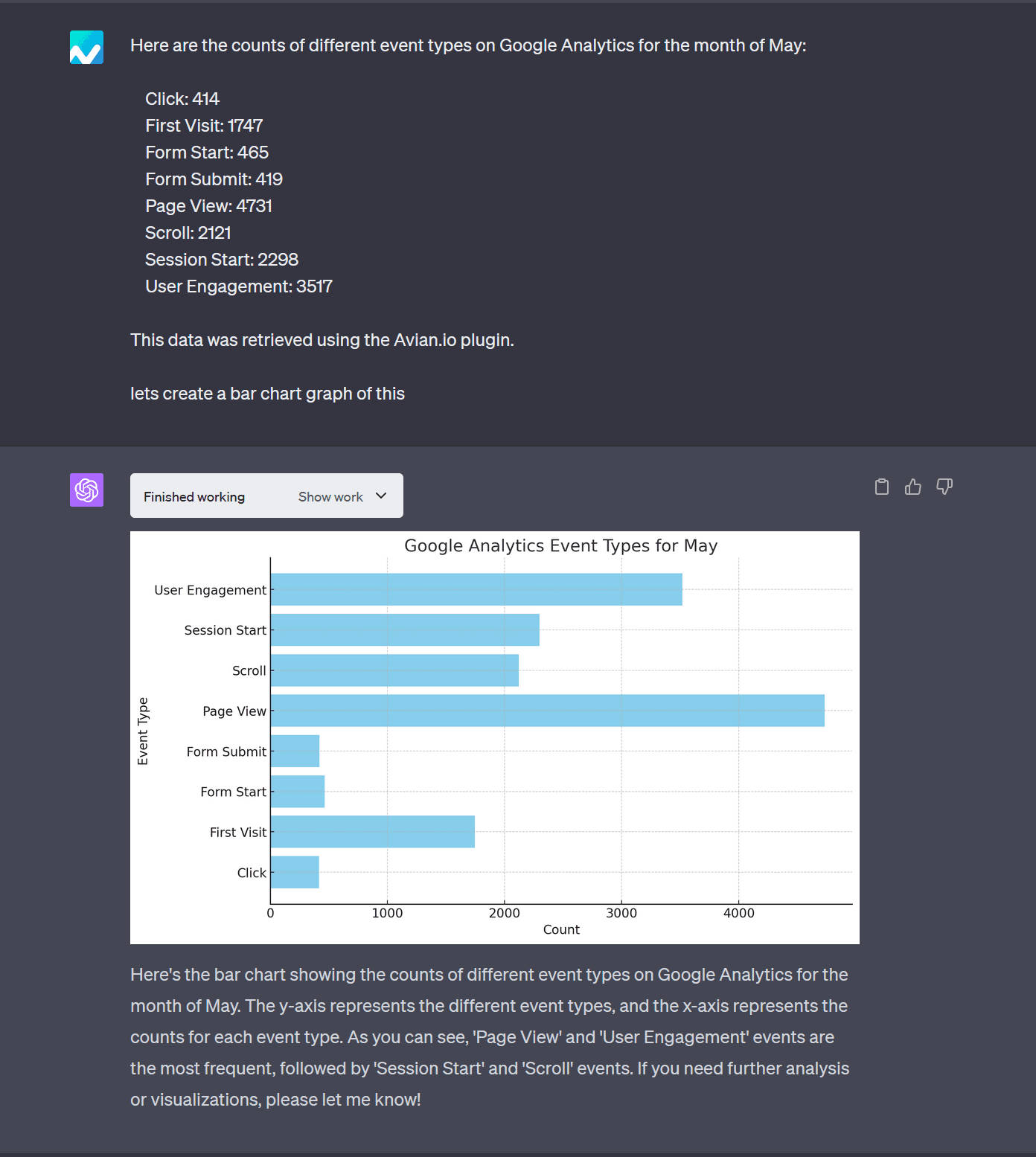

In the context of data analysis, LLMs equipped with code interpreters can perform a range of tasks, from cleaning datasets to applying statistical methods for deriving insights. By running code that automates data wrangling processes—such as filtering, sorting, or aggregating large datasets—LLMs can help simplify workflows.

Analysts can ask LLMs to run operations on a dataset, such as generating summary statistics or creating pivot tables, and the code interpreter will execute the required Python or R scripts to deliver the result. LLMs can also generate and execute code to visualize data. They can create bar charts, line graphs, or scatter plots using libraries like Matplotlib or Seaborn in Python.
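In practice, an LLM would most likely use pandas (for example `DataFrame.describe()` and `pivot_table()`) for requests like these. To keep the sketch dependency-free, the example below handles the same two jobs, summary statistics and a sum-based pivot table, with Python's standard library only. The sample data and function names are hypothetical.

```python
import csv
import io
import statistics
from collections import defaultdict

# Hypothetical sample data standing in for a user's uploaded CSV file.
SALES_CSV = """region,product,units
North,Widget,12
North,Gadget,7
South,Widget,5
South,Widget,9
South,Gadget,4
"""


def summarize(rows, column):
    """Summary statistics for one numeric column."""
    values = [float(row[column]) for row in rows]
    return {
        "count": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "min": min(values),
        "max": max(values),
    }


def pivot_sum(rows, index, columns, value):
    """Pivot table: total of `value` for each (index, columns) combination."""
    table = defaultdict(lambda: defaultdict(float))
    for row in rows:
        table[row[index]][row[columns]] += float(row[value])
    return {key: dict(inner) for key, inner in table.items()}


rows = list(csv.DictReader(io.StringIO(SALES_CSV)))
print(summarize(rows, "units"))
print(pivot_sum(rows, "region", "product", "units"))
```

Note that `csv` reads every field as a string, so numeric columns are converted with `float()` before any arithmetic.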

Solving Mathematical Problems

LLMs use code interpreters to handle complex mathematical operations that go beyond basic reasoning capabilities. By running code in real time, LLMs can generate solutions for algebraic equations, calculus problems, and statistical analyses. The code interpreter can execute Python code, for example, to solve matrix operations, optimize functions, or compute integrals.
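As a sketch of the kind of code an interpreter session might run, the example below solves a linear system exactly with Gauss-Jordan elimination over `fractions.Fraction`. It also approximates a definite integral with composite Simpson's rule. A real session would more likely call NumPy or SymPy. These standard-library versions are illustrative assumptions that keep the example self-contained.

```python
from fractions import Fraction


def solve_linear(a, b):
    """Solve the square system a x = b exactly via Gauss-Jordan elimination."""
    n = len(a)
    # Augmented matrix [a | b] with exact rational entries, so no rounding error.
    m = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        # Swap in the first row (at or below the diagonal) with a nonzero entry.
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col and m[r][col] != 0:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    # Only diagonal entries remain, so each unknown is rhs / diagonal.
    return [m[i][n] / m[i][i] for i in range(n)]


def simpson(f, lo, hi, n=100):
    """Approximate the integral of f over [lo, hi] with composite Simpson's rule."""
    if n % 2:
        raise ValueError("n must be even")
    h = (hi - lo) / n
    total = f(lo) + f(hi)
    for i in range(1, n):
        # Interior weights alternate 4, 2, 4, ..., 4.
        total += (4 if i % 2 else 2) * f(lo + i * h)
    return total * h / 3


if __name__ == "__main__":
    # x + y + z = 6, 2y + 5z = -4, 2x + 5y - z = 27
    print(solve_linear([[1, 1, 1], [0, 2, 5], [2, 5, -1]], [6, -4, 27]))
    print(simpson(lambda x: x**3, 0, 2))  # exact integral is 4
```

Working in exact fractions avoids the floating-point drift that text-only reasoning and naive float arithmetic can both introduce. Simpson's rule, on the other hand, is a numerical approximation, exact only for polynomials up to degree three.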

Converting Files Between Formats

Code interpreters enable LLMs to convert files between different formats, which is a common need in both personal and professional settings. Users can ask an LLM to transform a CSV file into JSON, convert Excel sheets into XML, or extract text from PDFs. The LLM, via its code interpreter, will generate and run the appropriate code to perform these conversions.
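For the CSV case, the generated code can be as short as the sketch below. It uses only Python's standard library (`csv`, `json`, `xml.etree.ElementTree`) and shows both a CSV-to-JSON and a CSV-to-XML conversion. Excel and PDF inputs would need third-party libraries, which this sketch leaves out. The function names are hypothetical.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET


def csv_to_json(csv_text):
    """Convert CSV text (first row = headers) into a JSON array of objects."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    return json.dumps(rows, indent=2)


def csv_to_xml(csv_text, root_tag="rows", row_tag="row"):
    """Convert CSV text into XML: one element per row, one child per column.

    Assumes the header names are valid XML tag names; ElementTree does not check.
    """
    root = ET.Element(root_tag)
    for record in csv.DictReader(io.StringIO(csv_text)):
        row_el = ET.SubElement(root, row_tag)
        for field, value in record.items():
            ET.SubElement(row_el, field).text = value
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    data = "name,qty\napple,3\npear,5\n"
    print(csv_to_json(data))
    print(csv_to_xml(data))
```

CSV has no type information, so every value stays a string (`"3"`, not `3`) unless the conversion code parses it explicitly.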

Notable Code Interpreter Tools {#notable-code-interpreter-tools}

1. OpenAI Code Interpreter

OpenAI Code Interpreter allows ChatGPT and assistants built with OpenAI’s Assistants API to write and run Python code in a sandboxed execution environment. It can process files with diverse data and formatting, generate files containing data and images of graphs, and iteratively solve challenging code and math problems. When the assistant writes code that fails to run, it can try different code until execution succeeds.

Features:

- Sandboxed execution environment: Provides a secure space to run Python code.

- Iterative code execution: Allows for multiple attempts to correct and execute code.

- File processing: Supports diverse file types including CSV, PDF, and JSON.

- Data and image generation: Can generate outputs such as data files and images of graphs.

- Real-time debugging: Facilitates immediate feedback for code corrections.
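OpenAI does not document the internals of this retry behavior, so the following is only a hypothetical sketch of the general pattern. The loop runs the generated code, and if the code raises an exception, it feeds the error back to the model and tries again. `fake_model` stands in for the LLM. `run_snippet` uses plain `exec`, which provides no sandboxing at all, unlike the isolated environment OpenAI describes.

```python
import contextlib
import io


def run_snippet(code):
    """Execute code in a fresh namespace; return (succeeded, stdout or error summary)."""
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, {})  # plain exec: no sandboxing or resource limits
    except Exception as exc:
        return False, f"{type(exc).__name__}: {exc}"
    return True, buffer.getvalue()


def solve_iteratively(model, max_attempts=5):
    """Ask `model` for code, run it, and feed any error back until a run succeeds."""
    history, error = [], None
    for _ in range(max_attempts):
        code = model(error)
        ok, output = run_snippet(code)
        history.append((code, ok))
        if ok:
            return output, history
        error = output  # the error text becomes context for the next attempt
    raise RuntimeError(f"no successful run in {max_attempts} attempts")


def fake_model(previous_error):
    """Hypothetical stand-in for an LLM that 'fixes' its code after seeing an error."""
    if previous_error is None:
        return "values = [4, 8, 15]\nprint(sum(values) / 0)"
    return "values = [4, 8, 15]\nprint(sum(values) / len(values))"


if __name__ == "__main__":
    output, history = solve_iteratively(fake_model)
    print(output, [ok for _, ok in history])
```

The retry budget (`max_attempts`) is an assumption. Some cap is needed so that a model that keeps producing failing code cannot loop forever.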

Source: OpenAI

2. Open Interpreter

Open Interpreter lets LLMs run code (Python, JavaScript, Shell, and more) locally. After installation, running the `interpreter` command in a terminal opens a ChatGPT-like chat interface, so users can control their computer’s capabilities through natural language.

It can handle tasks such as creating and editing media files, controlling a browser for research, and analyzing large datasets, and by default it asks for user approval before executing any code.

Features:

- Multi-language support: Executes code in Python, JavaScript, Shell, and more.

- Local execution: Runs code locally, providing full access to the internet and any installed package or library.

- Interactive terminal chat: Allows for conversational interaction in the terminal to execute commands and control system functions.

- Approval system: By default, users must approve code before it runs, which reduces the risk of executing harmful code.

- File and data manipulation: Create and edit photos, videos, PDFs, and perform data analysis.

- Browser control: Automate and control browser activities for research purposes.

- Flexible integration: Can be imported and driven from Python scripts as well as used interactively in the terminal.
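The approval step can be pictured as a gate in front of execution. The sketch below is hypothetical and does not reproduce Open Interpreter's own code. `confirm` is any callable that returns `True` to allow a run, which makes the gate easy to test without a terminal. `ask_user` is one possible interactive implementation.

```python
import contextlib
import io


def run_with_approval(code, confirm):
    """Execute `code` only if `confirm(code)` returns True; return its printed output."""
    if not confirm(code):
        return None  # user declined, so nothing runs
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(code, {})  # no sandbox: approved code runs with the host's full permissions
    return buffer.getvalue()


def ask_user(code):
    """Terminal prompt: show the proposed code and require an explicit 'y'."""
    print("Proposed code:\n" + code)
    return input("Run it? [y/N] ").strip().lower() == "y"
```

Calling `run_with_approval(code, ask_user)` gives the interactive behavior. Passing a different `confirm` callable makes it possible to plug in an automatic policy instead, at the cost of the human check.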


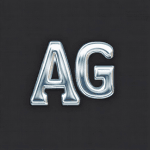

3. AutoGen

AutoGen is an open-source programming framework for building AI agents and enabling cooperation between agents to solve complex tasks. It integrates code interpreters into its multi-agent framework, enabling agents to write and execute code for tasks such as data analysis, mathematical modeling, and debugging.

AutoGen’s code executors are often run in Docker containers or on Jupyter servers, where agents can write, test, and debug Python or shell scripts. Its code is released under the MIT license and its documentation under CC-BY-4.0. At the time of writing, the project had over 30,000 stars on GitHub.

Features:

- Multi-agent interaction: Agents can collaborate by sending and receiving code and task messages.

- Code executors: Supports execution of Python and shell scripts, either locally or in a Docker/Jupyter environment.

- Customizable components: Agents can be configured to interact with code, tools, or other agents for complex workflows.

- Extensibility: Smoothly integrates additional agents or tools into workflows.

- Human-in-the-loop: Optional integration allows users to supervise or intervene during agent-driven tasks.
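The message-passing pattern behind multi-agent code execution can be sketched in a few lines. The example below is not AutoGen's API. It is a hypothetical mock-up of a two-agent exchange in which a writer agent (standing in for an LLM) proposes code and an executor agent runs it and reports the output. The conversation ends when the writer sends a stop message, here `TERMINATE`. All class and function names are illustrative assumptions.

```python
import contextlib
import io
from dataclasses import dataclass


@dataclass
class Message:
    sender: str
    content: str


class WriterAgent:
    """Hypothetical agent that turns a task into code (an LLM would do this)."""

    def reply(self, message):
        if message.sender == "user":
            return Message("writer", "print(sum(range(1, 101)))")
        return Message("writer", "TERMINATE")  # stop once a result has come back


class ExecutorAgent:
    """Hypothetical agent that runs received code and reports its output."""

    def reply(self, message):
        buffer = io.StringIO()
        with contextlib.redirect_stdout(buffer):
            exec(message.content, {})  # no isolation here, unlike a Docker executor
        return Message("executor", buffer.getvalue().strip())


def converse(task, writer, executor, max_turns=4):
    """Alternate writer and executor turns until the writer says TERMINATE."""
    transcript = [Message("user", task)]
    for _ in range(max_turns):
        proposal = writer.reply(transcript[-1])
        transcript.append(proposal)
        if proposal.content == "TERMINATE":
            break
        transcript.append(executor.reply(proposal))
    return transcript


if __name__ == "__main__":
    for msg in converse("Add the numbers 1 to 100.", WriterAgent(), ExecutorAgent()):
        print(f"{msg.sender}: {msg.content}")
```

Keeping code generation and code execution in separate agents is what allows the executor to be swapped for a containerized one without changing the writer.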

Source: Microsoft

4. Bearly Code Interpreter

Bearly Code Interpreter integrates AI into everyday workflows to speed up tasks such as reading, writing, and content creation. According to Bearly, it is used by professionals at institutions including MIT, Google, Bain & Company, Bridgewater, and Dow Chemical.

Features:

- Document interaction: Chat with any document, analyze, and ask questions across attached files for immediate insights.

- Transcription services: Convert audio and video from podcasts, YouTube videos, or meeting recordings into interactive transcripts.

- Real-time web access: Draws on Google Search to answer queries with up-to-date results.

- Meeting minutes and key takeaways: Automatically generate meeting minutes and highlight key points using language models.

- Flexible AI model integration: Access various AI models, including OpenAI’s GPT models and Anthropic’s Claude, to pick the most suitable one for a given task.

- Prompt marketplace: Utilize over 50 reading and writing templates to streamline tasks.

- Cross-platform availability: Available on iOS and as a Chrome extension for easy access to AI tools.

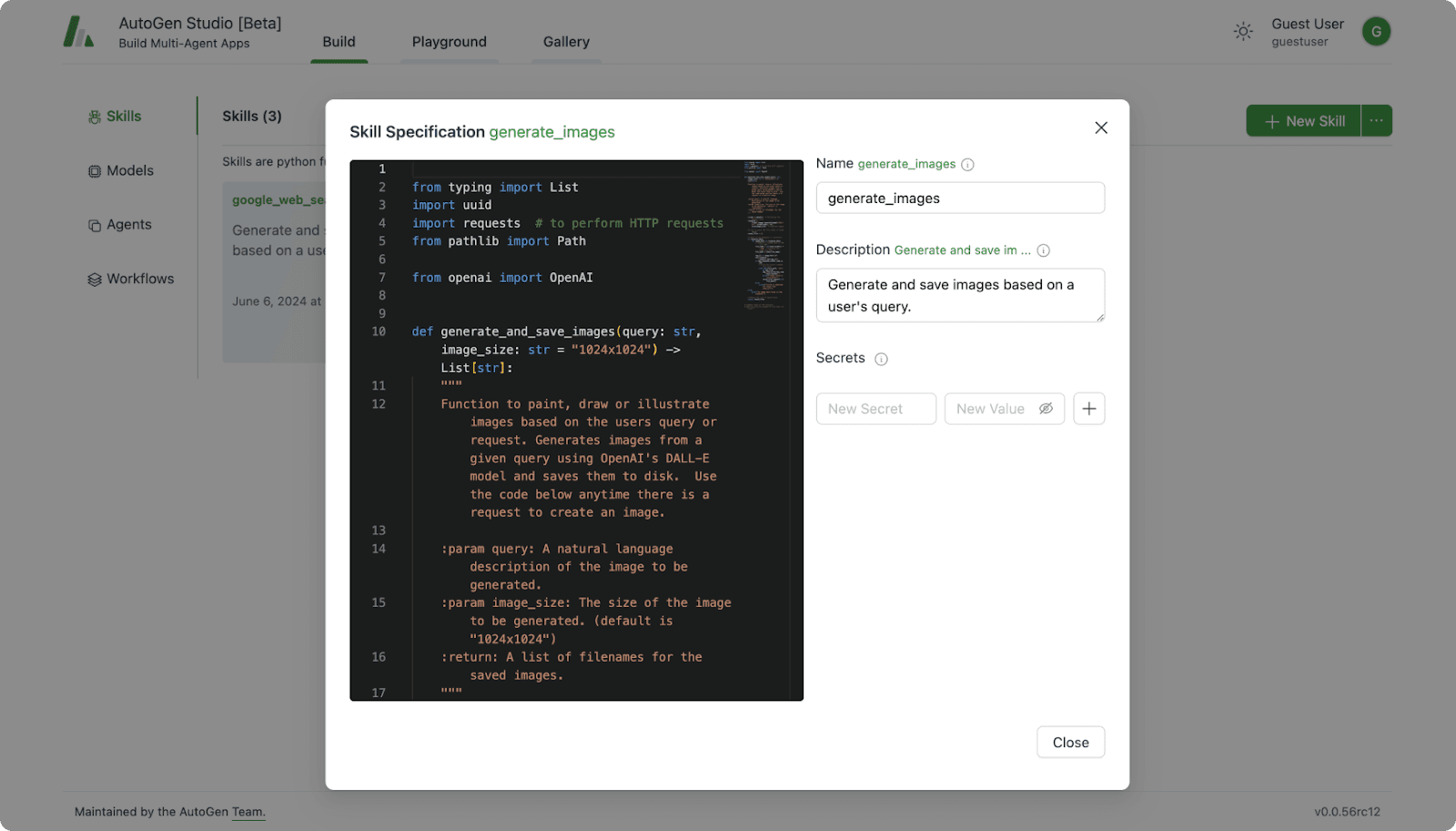

5. Aider

Aider enables pair programming with LLMs to edit code in local git repositories. It works with both new projects and existing repositories, and supports GPT-4, Claude 3.5 Sonnet, and other LLMs.

Features:

- File editing: Run Aider with specific files to edit (e.g., `aider <file1> <file2> ...`).

- Change requests: Ask for new features or test cases, describe bugs, paste error messages, or request refactoring.

- Documentation updates: Update documentation within the repository.

- Automatic git commits: Aider commits changes with sensible commit messages.

- Language support: Works with Python, JavaScript, TypeScript, PHP, HTML, CSS, and more.

- Multi-file editing: Handles complex requests across multiple files.

- Repository mapping: Utilizes a map of the entire git repo for efficient operation in larger codebases.

- Real-time sync: You can edit files in your own editor while chatting with Aider, which always works from the latest version of each file.

- Enhanced interaction: Add images and URLs to the chat; Aider reads and incorporates their content.

- Voice coding: Supports coding via voice commands.
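According to Aider’s documentation, its repository map is built with tree-sitter and ranks the most relevant symbols. The sketch below is a much simpler, hypothetical illustration of the underlying idea. It uses Python's `ast` module to list each file's top-level classes and functions, so that a compact outline, rather than every file's full contents, can be sent to the model. It handles Python files only and is not Aider’s implementation.

```python
import ast
from pathlib import Path


def repo_map(root):
    """Map each Python file under root to its top-level classes and functions."""
    summary = {}
    for path in sorted(Path(root).rglob("*.py")):
        tree = ast.parse(path.read_text(encoding="utf-8"), filename=str(path))
        names = [
            node.name
            for node in tree.body
            if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef))
        ]
        summary[path.relative_to(root).as_posix()] = names
    return summary


def render(summary):
    """Format the map as compact text suitable for inclusion in a prompt."""
    lines = []
    for file, names in summary.items():
        lines.append(file + ":")
        lines.extend(f"  {name}" for name in names)
    return "\n".join(lines)


if __name__ == "__main__":
    print(render(repo_map(".")))
```

An outline like this costs far fewer tokens than the full source, which is why a map helps on larger codebases. A fuller version would also need to decide which symbols matter most for the current request.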

Source: aider.chat

How to Choose LLM Code Interpreter Tools {#how-to-choose-llm-code-interpreter-tools}

When evaluating code interpreters for language models, consider the following aspects.

Execution Environment

When choosing a code interpreter for LLMs, it’s crucial to assess the execution environment, which defines where and how the code runs. Tools like OpenAI’s Code Interpreter use a sandboxed environment to limit system access. This is useful for running Python scripts for data analysis, file manipulation, or math computations, without exposing sensitive system functions.

Interpreters like Open Interpreter allow local execution, providing greater flexibility. The code can use every library and package installed on the machine, but it also has full access to system resources. That calls for more caution and makes local execution better suited to advanced users who need broader control over their computing environment.
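To illustrate one small step toward isolation, the sketch below runs generated code in a separate Python process with a time limit instead of inside the host program. It is a hypothetical, minimal example rather than a true sandbox. The child process still has the user’s file and network access, which is exactly the trade-off that local-execution tools ask users to accept.

```python
import subprocess
import sys


def run_isolated(code, timeout=5.0):
    """Run code in a separate Python process with a time limit.

    This is process isolation only: the child can still read files and use the
    network. Real sandboxes add containers, VMs, or OS-level restrictions.
    """
    try:
        completed = subprocess.run(
            # -I (isolated mode) ignores PYTHON* environment variables and user site-packages.
            [sys.executable, "-I", "-c", code],
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        # subprocess.run kills the child before re-raising TimeoutExpired.
        return {"ok": False, "stdout": "", "stderr": f"timed out after {timeout}s"}
    return {
        "ok": completed.returncode == 0,
        "stdout": completed.stdout,
        "stderr": completed.stderr,
    }


if __name__ == "__main__":
    print(run_isolated("print(2 ** 10)"))
    print(run_isolated("while True: pass", timeout=1))
```

A crash or infinite loop in the generated code now ends only the child process, and the host receives a structured result it can pass back to the model.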

Real-Time Feedback

Real-time feedback is crucial during tasks like debugging or iterative code development. Tools like OpenAI Code Interpreter offer immediate feedback by running code interactively and suggesting corrections, making it easier to identify and fix errors as they occur. This is particularly useful when handling complex tasks such as data visualizations or file conversions.

Tools like Bearly and Open Interpreter provide conversational interaction where users can refine commands and adjust code in real time. This feedback loop is useful for fast debugging, testing hypotheses, and improving code accuracy on the fly, supporting developers and analysts.

Language Support

The range of programming languages supported by a code interpreter can affect its versatility. Tools like Open Interpreter provide multi-language support, enabling users to run Python, JavaScript, and Shell scripts, among others. This flexibility is suited for users working in different environments or needing to switch between tasks such as web development and data science.

Other tools, like Aider, focus primarily on programming languages used in web development and data analysis (e.g., Python, JavaScript, TypeScript). When selecting an interpreter, it’s important to choose one that aligns with the languages used in the project.

Security

Security is a top consideration when running LLM-generated code, whether locally or in the cloud. Sandboxed interpreters like OpenAI’s run code in an isolated environment that restricts access to system resources. This matters most for users handling sensitive data or working in regulated industries.

Tools like Open Interpreter, which run code locally, mitigate this risk with an approval system. By default, users must manually approve each code block before it runs, which reduces the chance that malicious or harmful code runs inadvertently. This layer of control helps balance flexibility with safety when using LLM-powered interpreters on local machines.

Build with LLM Code Interpreters and Acorn

Visit https://gptscript.ai to download GPTScript and start building today. As we expand GPTScript’s capabilities, we are also expanding our list of tools, which you can use to build a wide range of applications. Check out tools.gptscript.ai to get started.

See Additional Guides on Key AI Technology Topics

Together with our content partners, we have authored in-depth guides on several other topics that can also be useful as you explore the world of AI technology.

AI Cyber Security

Authored by Exabeam

- AI Cyber Security: Securing AI Systems Against Cyber Threats

- AI Regulations and LLM Regulations: Past, Present, and Future

- Artificial Intelligence (AI) vs. Machine Learning (ML): Key Differences and Examples

Open Source AI

Authored by Instaclustr

- Top 10 open source databases: Detailed feature comparison

- Open source AI tools: Pros and cons, types, and top 10 projects

- Open source data platform: Architecture and top 10 tools to know

Vector Database

Authored by Instaclustr