What Is OpenAI GPT-4?

OpenAI GPT-4, or Generative Pre-trained Transformer 4, is the fourth generation in OpenAI’s series of large language models (LLMs), designed to understand and generate human-like text based on prompts. This model builds on the capabilities of its predecessors, enhancing its ability to handle more nuanced and complex language tasks. As of the time of this writing, the GPT-4 family ranks at or near the top of most published LLM benchmarks.

In addition, GPT-4 significantly increased the amount of text that can be processed in a single conversation (the context window). The initial release offered a context window of about 8,000 tokens, with a 32,000-token variant (GPT-4-32k), and later editions increased this to 128,000 tokens.

The development of GPT-4 has been marked by improvements in both the scale of training data and the refinement of model architecture. Like its predecessors, the model supports a broad range of applications, from writing assistance and coding help to detailed creative content generation, enabling new applications across industries.

While OpenAI’s previous model, GPT-3.5, is offered free as part of the ChatGPT interface, GPT-4 is a paid offering. It is available for a monthly subscription fee as part of the Plus, Team, and Enterprise editions of ChatGPT, and through the OpenAI API with on-demand pricing. This is part of an extensive series of guides about machine learning.

Understanding GPT-4 Architecture

OpenAI has not published GPT-4’s architecture, but the model is widely reported to depart from previous models by adopting a mixture of experts (MoE) design, which enhances both scalability and specialization. Unlike a standard dense transformer, in which every parameter is used for every input, an MoE model comprises multiple expert neural networks, each specialized in specific tasks or data types, and routes each input to only a few of them. This structure allows GPT-4 to handle complex queries more efficiently.

According to industry reports, training GPT-4 required substantial computational resources, utilizing around 25,000 NVIDIA A100 GPUs over 90 to 100 days and a dataset of approximately 13 trillion tokens. For inference, the model operates on clusters of 128 A100 GPUs.

Key aspects of GPT-4’s architecture include:

- Total parameters: Industry experts estimate that GPT-4 uses approximately 1.8 trillion parameters, over ten times more than GPT-3.

- Model composition: The model reportedly consists of 16 expert models, each with approx. 111 billion parameters. For any given inference query, two expert models are activated. The model uses around 55 billion shared parameters for attention mechanisms.

- Inference efficiency: During inference, only a subset of the model’s parameters is utilized, amounting to about 280 billion parameters per query.
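
The arithmetic behind these estimates can be checked with a short sketch. It assumes roughly 111 billion parameters per expert and 55 billion shared attention parameters, which are figures from unconfirmed industry reports rather than published specifications; the function and constant names are illustrative only.

```python
# Reported (unconfirmed) GPT-4 figures, in billions of parameters.
# All values are assumptions taken from industry estimates.
NUM_EXPERTS = 16
PARAMS_PER_EXPERT_B = 111
SHARED_ATTENTION_B = 55
ACTIVE_EXPERTS = 2


def total_params_b(num_experts, per_expert_b, shared_b):
    """Parameters stored in the whole model: every expert plus shared layers."""
    return num_experts * per_expert_b + shared_b


def active_params_b(active_experts, per_expert_b, shared_b):
    """Parameters used for one query: only the routed experts plus shared layers."""
    return active_experts * per_expert_b + shared_b


total = total_params_b(NUM_EXPERTS, PARAMS_PER_EXPERT_B, SHARED_ATTENTION_B)
active = active_params_b(ACTIVE_EXPERTS, PARAMS_PER_EXPERT_B, SHARED_ATTENTION_B)

print(f"total:  {total} billion parameters")
print(f"active: {active} billion parameters ({active / total:.0%} of the total)")
```

With these inputs the total comes to 1,831 billion (about 1.8 trillion) and the active set to 277 billion (about 280 billion), which matches the estimates quoted above.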

The MoE design offers two primary benefits:

- Scalability: By routing inference through only the relevant expert models, the overall system can scale significantly without prohibitive inference costs.

- Specialization: Each expert model can develop specialized knowledge, enhancing the model’s overall capabilities and performance.
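
To make the routing idea concrete, here is a minimal sketch of top-2 expert routing in plain Python. It is not GPT-4’s implementation, which is unpublished: the experts are toy scalar functions, and the gate scores are hard-coded, whereas a real MoE layer computes them with a learned router for each token.

```python
import math


def softmax(scores):
    """Convert raw gate scores into probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k=2):
    """Pick the k highest-scoring experts and renormalize their weights to sum to 1."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and combine their outputs by gate weight."""
    return sum(weight * experts[i](x) for i, weight in route_top_k(gate_scores, k))


# 16 hypothetical experts; expert i simply multiplies its input by (i + 1).
experts = [lambda x, scale=scale: scale * x for scale in range(1, 17)]

# Hard-coded gate scores that favor experts 3 and 7 equally.
gate_scores = [0.0] * 16
gate_scores[3] = 2.0
gate_scores[7] = 2.0

selected = route_top_k(gate_scores)
output = moe_forward(10.0, experts, gate_scores)
print(selected, output)
```

Only two of the sixteen expert functions are evaluated per call, which is the source of the inference savings described above: compute grows with the number of active experts, not the total.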

OpenAI GPT-3.5 vs GPT-4: What Are the Differences?

Related content: Read our guide to GPT-3 vs GPT-4.

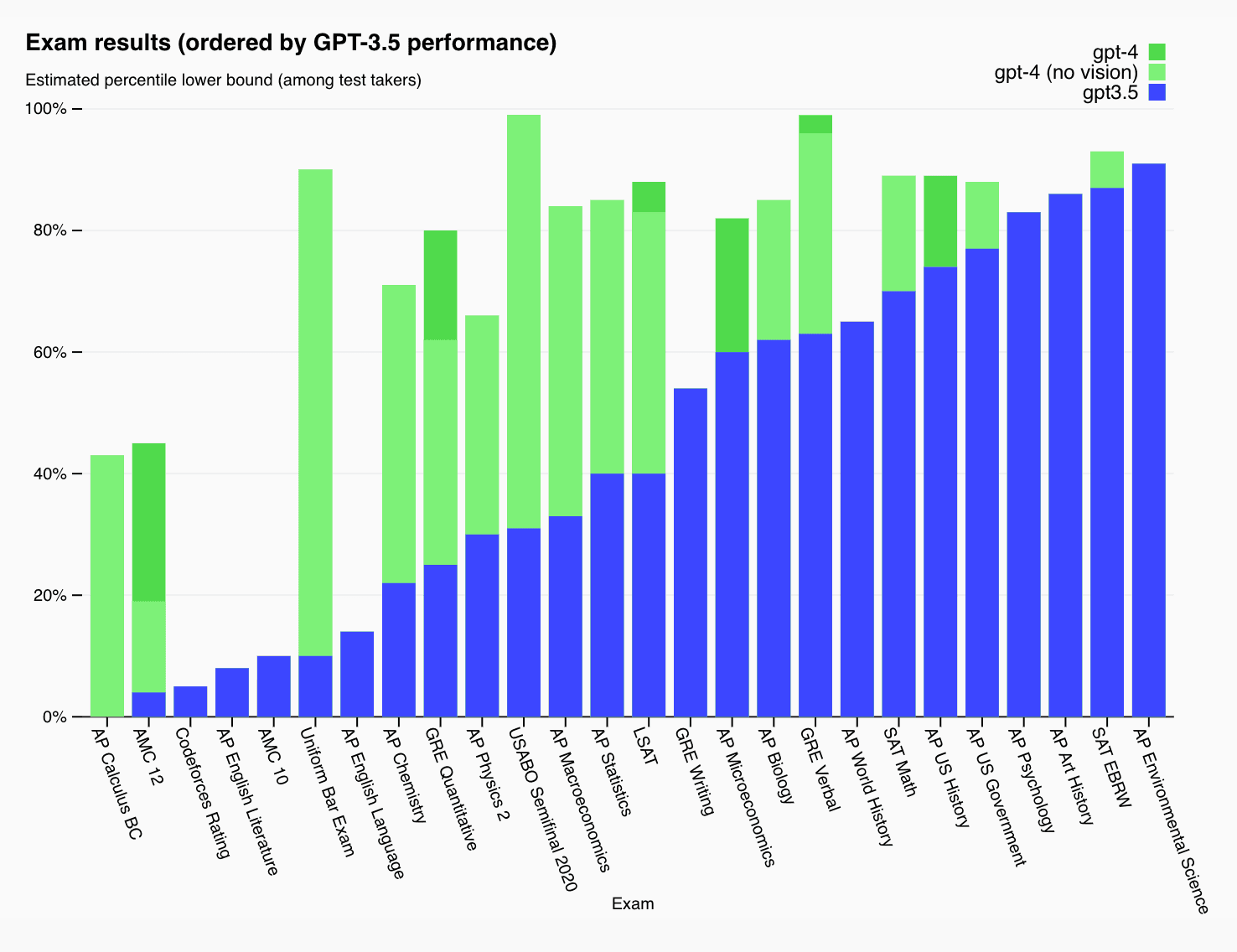

GPT-4, OpenAI’s latest language model, surpasses GPT-3.5 in various benchmarks and practical applications. One of the key improvements in GPT-4 is its enhanced performance in professional and academic tasks. For instance, GPT-4 scores in the top 10% of simulated bar exam takers, whereas GPT-3.5 scores in the bottom 10%.

Image credit: OpenAI

In addition to performance benchmarks, GPT-4 exhibits better steerability and factual accuracy compared to GPT-3.5. According to OpenAI, GPT-4 scores 40% higher than GPT-3.5 on the company’s internal adversarial factuality evaluations. This improvement is crucial for applications requiring high reliability and precision.

Another notable difference is the multimodal capability of GPT-4. Unlike GPT-3.5, which is limited to text inputs, GPT-4 can process both text and image inputs. This functionality expands the range of tasks GPT-4 can handle, including those involving visual information, such as analyzing diagrams or images interspersed with text.

GPT-4 also introduces advancements in training infrastructure. OpenAI co-designed a supercomputer with Azure to support GPT-4’s training, leading to improved stability and predictability in the training process. These enhancements not only improve the model’s performance but also its scalability and efficiency.

GPT-4 Model Editions

Following the original release of GPT-4 in March 2023, OpenAI has released two newer editions with improved capabilities: GPT-4 Turbo and GPT-4o. The legacy GPT-4 model is still available through the API.

GPT-4 Turbo

GPT-4 Turbo is a high-performance variant of the original GPT-4, designed to deliver faster and more efficient processing. It achieves this by optimizing the underlying architecture and leveraging advanced hardware accelerations.

This edition maintains the same capabilities as GPT-4 but offers improved response times, making it suitable for real-time applications where latency is critical.

Importantly, GPT-4 Turbo has a more recent knowledge cutoff than the original GPT-4 (December 2023 for the latest GPT-4 Turbo release, compared to September 2021), meaning that the model is able to provide information on more recent world events.

GPT-4o

GPT-4o (“o” for “omni”) represents a significant advancement in human-computer interaction, integrating multiple input and output modalities. This model can process text, audio, image, and video inputs, and generate text, audio, and image outputs. With an audio response time as low as 232 milliseconds, and about 320 milliseconds on average according to OpenAI, it approaches human conversational speed.

A major innovation in GPT-4o is its unified model approach, which contrasts with previous models that used separate pipelines for different tasks. In the past, Voice Mode in GPT-3.5 and GPT-4 involved three distinct models for transcribing audio to text, processing the text, and converting text back to audio. This separation meant loss of nuanced information like tone and background noises. By contrast, GPT-4o processes all input and output through a single neural network, enabling more natural and expressive interactions, including recognizing multiple speakers and emotional content.

In terms of performance, GPT-4o matches GPT-4 Turbo for text in English and code, with notable improvements in multilingual, audio, and vision tasks. It is also faster and 50% cheaper to use via API. GPT-4o’s vision and audio understanding are significantly enhanced, setting new benchmarks in these areas.

In addition, GPT-4o’s knowledge cutoff is October 2023.

GPT-4 Interfaces

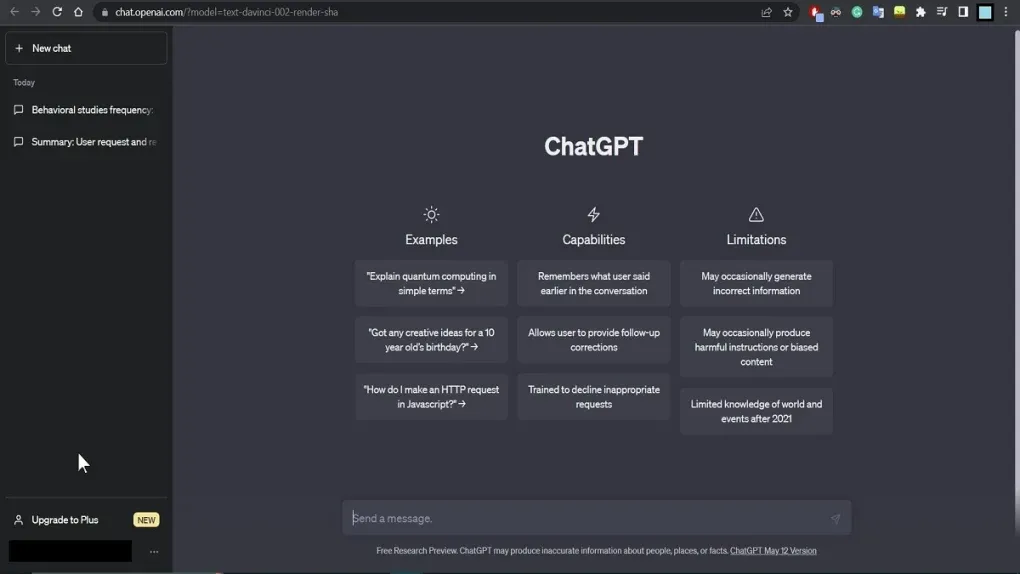

ChatGPT Web Application

The ChatGPT web application provides a user-friendly platform for accessing GPT-4’s capabilities. Users can interact with the model directly through their web browsers, typing prompts and receiving text-based responses in real-time.

The web application also includes features such as conversation history; custom instructions, which modify the ‘system message’ that tells GPT-4 how to respond to queries; and memory, which allows the model to remember details from previous interactions.

Direct link: https://chatgpt.com/

Source: OpenAI

ChatGPT Mobile Application

The ChatGPT mobile application brings GPT-4 to smartphones and tablets, allowing users to access its features on the go. This app offers the same functionality as the web application, including real-time text generation and conversation history. It is optimized for mobile devices, and with the release of GPT-4o, provides improved voice interaction capabilities.

How to access: Android Play Store / iPhone App Store

Source: OpenAI

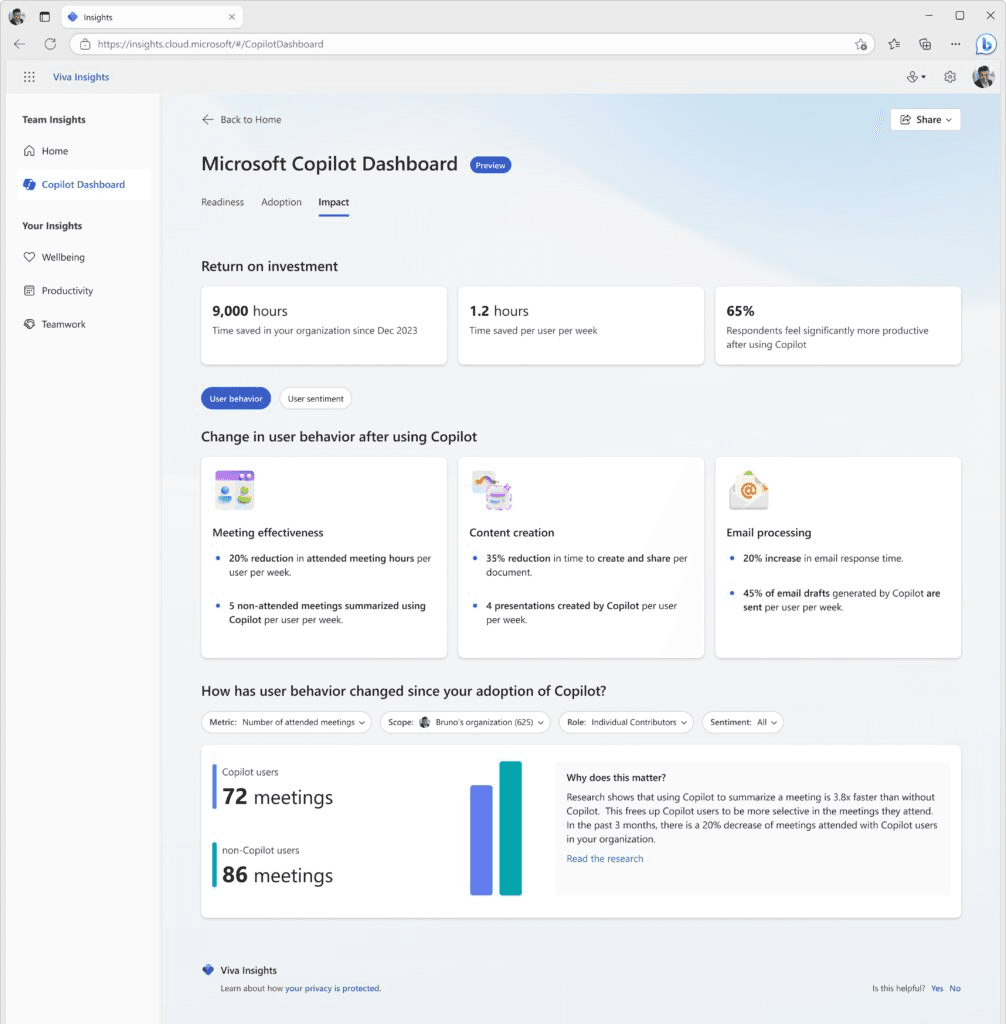

GPT-4 in Microsoft Copilot

GPT-4 is integrated into Microsoft’s suite of productivity tools as part of the Microsoft Copilot feature. This integration allows users to use GPT-4 capabilities within applications like Word, Excel, and Outlook, as well as Microsoft’s search engine, Bing. Microsoft Copilot leverages GPT-4 to assist with tasks such as drafting documents, analyzing data, generating email responses, and responding to natural language queries within the Bing search interface.

Direct link: https://copilot.microsoft.com/

Source: Microsoft

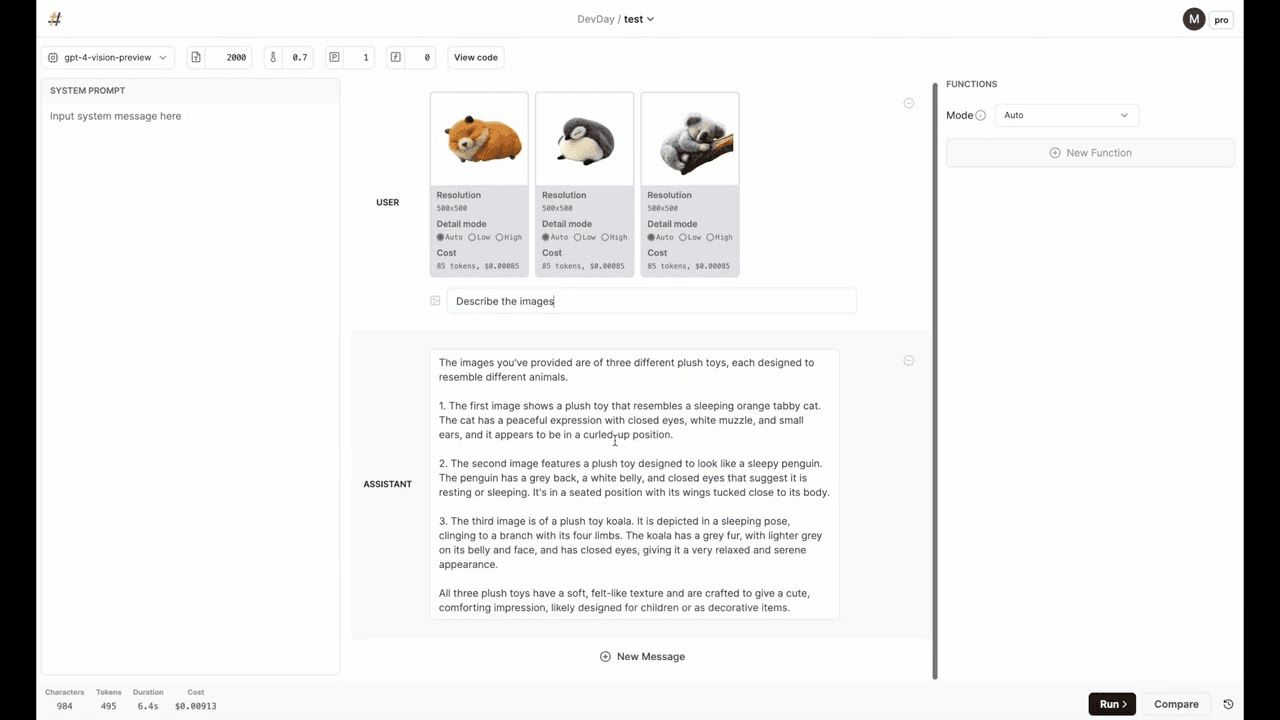

OpenAI Playground

The OpenAI GPT-4 Playground is an interactive web platform where users can experiment with GPT-4’s capabilities. This tool provides a user-friendly interface where one can input prompts and receive responses generated by GPT-4. The playground also allows developers to experiment with AI Assistants, each with its own ‘system prompt’ that defines how GPT-4 should respond to user queries.

Direct link: https://platform.openai.com/playground

Source: OpenAI

OpenAI API

The OpenAI API provides developers with flexible access to GPT-4’s capabilities, enabling the integration of advanced language processing into various applications. Developers can customize the model’s behavior using request parameters such as temperature, along with system prompts and advanced prompt engineering strategies.
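
As a rough illustration of how a system prompt and a temperature setting travel with a request, the sketch below builds a Chat Completions payload and posts it using only the Python standard library. The helper names, the example prompts, and the default model and temperature are assumptions chosen for illustration; the official OpenAI client library is the more usual choice in practice.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(user_prompt, system_prompt, model="gpt-4o", temperature=0.2):
    """Assemble the JSON body: the system message steers behavior,
    and temperature controls how random the sampling is."""
    return {
        "model": model,
        "temperature": temperature,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
    }


def send_chat_request(payload, api_key):
    """POST the payload and return the text of the first completion."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


payload = build_chat_request(
    user_prompt="Explain what a context window is in two sentences.",
    system_prompt="You are a concise technical assistant.",
)

# Only contacts the API when a key is present in the environment.
if __name__ == "__main__" and os.environ.get("OPENAI_API_KEY"):
    print(send_chat_request(payload, os.environ["OPENAI_API_KEY"]))
```

Lower temperature values make responses more deterministic, which tends to suit extraction or classification tasks, while higher values suit brainstorming and creative writing.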

Learn more: OpenAI API Documentation

GPT-4 Consumer Pricing (via ChatGPT)

OpenAI offers several subscription plans for GPT-4, designed to meet different user needs:

Free Plan

As of May 13, 2024, OpenAI has announced that the free plan of ChatGPT will provide access to its latest model, GPT-4o. Previously, the free version offered the GPT-3.5 Turbo model.

Plus Plan

Cost: $20 per month

Includes access to GPT-4 within the ChatGPT interface. Offers a higher usage cap and priority access during peak times compared to the free tier.

Team Edition

Cost: $25 per user per month billed annually, or $30 per user per month billed monthly

Designed for small to medium-sized teams. Includes additional collaboration and security features, as well as higher usage limits. Billed on a per-user basis.

Enterprise Plan

Offers customizable plans for larger organizations. Features extensive usage allowances, enhanced security, and dedicated support. Pricing varies based on specific needs and scale of deployment.

GPT-4 API Pricing

OpenAI makes GPT-4 accessible via its API. The cost varies based on the model and context length capabilities.

- Models with a 128k context length, like GPT-4 Turbo, are priced at $10.00 per 1 million prompt tokens, equivalent to $0.01 per 1,000 prompt tokens. The cost for sampled tokens in these models is $30.00 per 1 million, or $0.03 per 1,000 tokens.

- Models offering a 32k context length, like GPT-4-32k and GPT-4-32k-0314, are priced at $60.00 per 1 million prompt tokens ($0.06 per 1K prompt tokens) and $120.00 per 1 million sampled tokens ($0.12 per 1K sampled tokens).

- Models with an 8k context length, such as the standard GPT-4 and GPT-4-0314, are priced at $30.00 per 1 million prompt tokens ($0.03 per 1K prompt tokens) and $60.00 per 1 million sampled tokens ($0.06 per 1K sampled tokens).
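
The per-request cost follows directly from these rates. The sketch below uses the prices listed above; the tier labels and the example token counts are hypothetical, and actual prices may have changed since this was written.

```python
# USD per 1 million tokens as (prompt, sampled), taken from the list above.
# The tier labels are illustrative, not API model names.
PRICES_PER_MILLION = {
    "128k": (10.00, 30.00),
    "32k": (60.00, 120.00),
    "8k": (30.00, 60.00),
}


def request_cost(tier, prompt_tokens, sampled_tokens):
    """Return the USD cost of one request for the given context-length tier."""
    prompt_price, sampled_price = PRICES_PER_MILLION[tier]
    return (prompt_tokens * prompt_price + sampled_tokens * sampled_price) / 1_000_000


# Example: a 2,000-token prompt that produces a 500-token answer.
cost_128k = request_cost("128k", 2_000, 500)
cost_8k = request_cost("8k", 2_000, 500)
print(f"128k tier: ${cost_128k:.3f}  8k tier: ${cost_8k:.3f}")
```

For this example the 128k tier comes to $0.035 and the 8k tier to $0.09, so the newer 128k models are cheaper per request despite the larger context window.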

GPT-4 vs. Gemini vs. Claude vs. LLaMA vs. Mistral: How Do They Compare?

Performance Benchmarks

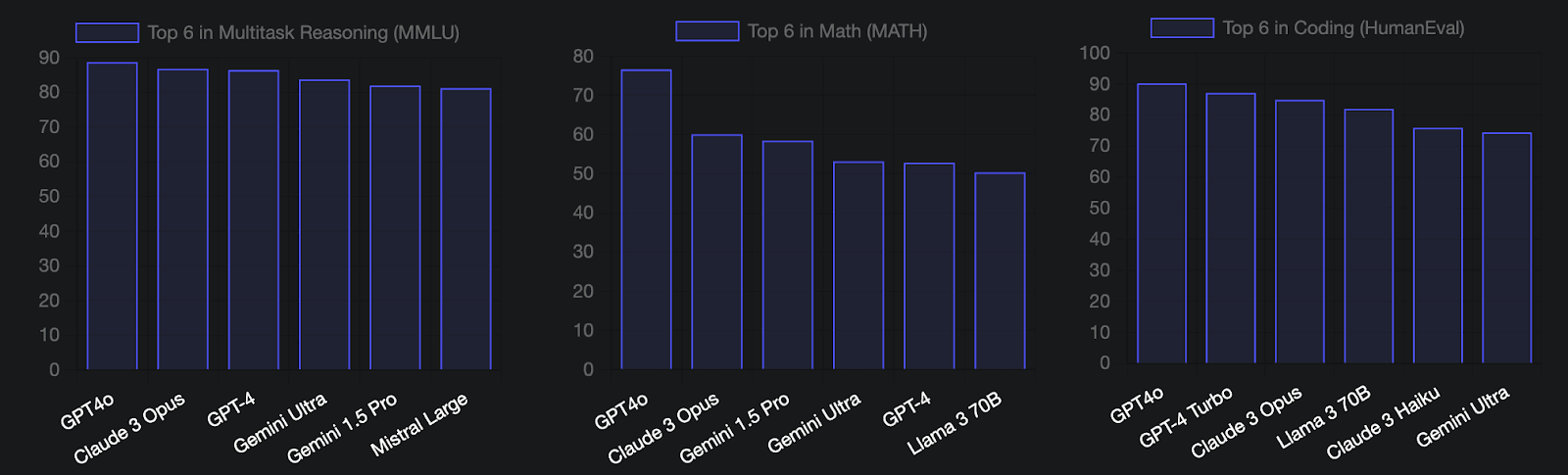

This chart from Vellum summarizes performance benchmarks comparing GPT-4, GPT-4o, Google Gemini 1.5 Pro, Claude 3 Opus, LLaMA 3 70B, and Mistral Large. GPT-4o beats all other models in multitask reasoning (MMLU), math (MATH), and coding (HumanEval).

Image credit: Vellum.ai

Let’s look in more detail at how these models compare.

Context window size

GPT-4 Turbo and GPT-4o provide a context window of 128,000 tokens. They are surpassed by Gemini 1.5 Pro, with a context window of 1 million tokens, and Claude 3, which supports 200,000 tokens.
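
In practice, the context window determines whether a given document can be sent to a model in one piece. The sketch below checks a token count against the window sizes quoted above; the `reserved_output_tokens` allowance is an assumed value for illustration, and token counts would normally come from the provider’s tokenizer.

```python
# Context window sizes in tokens, as quoted in the text above.
CONTEXT_WINDOWS = {
    "GPT-4 Turbo": 128_000,
    "GPT-4o": 128_000,
    "Claude 3": 200_000,
    "Gemini 1.5 Pro": 1_000_000,
}


def models_that_fit(prompt_tokens, reserved_output_tokens=4_000):
    """List models whose window holds the prompt plus room for the response.

    reserved_output_tokens is a hypothetical allowance for the model's reply.
    """
    needed = prompt_tokens + reserved_output_tokens
    return [name for name, window in CONTEXT_WINDOWS.items() if window >= needed]


fits_small = models_that_fit(100_000)
fits_large = models_that_fit(150_000)
print(fits_small)
print(fits_large)
```

A 100,000-token prompt fits every model listed, while a 150,000-token prompt fits only Claude 3 and Gemini 1.5 Pro and would need to be split or summarized for the GPT-4 editions.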

Cost efficiency

As of the time of this writing, Gemini Pro is the most economical at $0.125 per million tokens, followed by Mistral Tiny at $0.15. GPT-3.5 Turbo also offers a competitive rate at $0.50 per million tokens. GPT-4o costs $5 per million tokens, and Claude 3 Opus costs three times as much, at $15 per million tokens.

Performance benchmarks

According to an average of six performance benchmarks reported by Vellum, Claude 3 Opus has the highest score at 84.83%, leading in MMLU (86.80%) and HellaSwag (95.40%). GPT-4 is somewhat behind on the average at 79.45%, but nearly matches Claude 3 Opus in MMLU (86.40%) and HellaSwag (95.30%). GPT-4o surpasses Claude 3 Opus in the benchmarks where data is available: MMLU, HumanEval, and MATH.

Building Applications with GPT-4 and Acorn

Visit https://gptscript.ai to download GPTScript and start building today. As we expand on the capabilities with GPTScript, we are also expanding our list of tools. With these tools, you can create any application imaginable: check out tools.gptscript.ai to get started.
